Introducing AI Integration into Utility Apps

Jack Tolley - @TechTolley

JUXT - @juxtpro

Background

- Jack - Software Engineer @ JUXT

- e/acc baby!

- @TechTolley for AI

- @juxtpro for tech talks

Overview

High-level overview of LLMs and personal experience

Breakdown

- Current State - LLM topics and tidbits

- Example Features - Awesome LLM features

- Implementation - Experience with LLM styles

- Workflow Augmentation - Using LLMs for coding

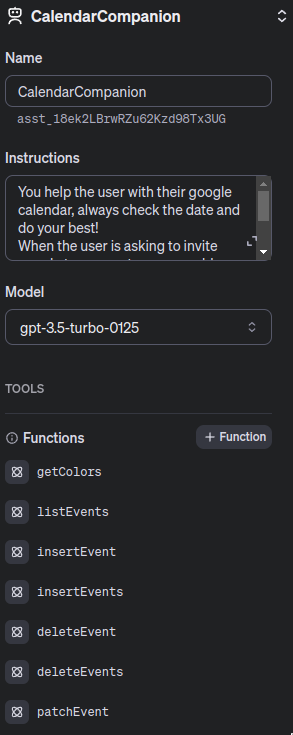

LLM Modes

Completion: Send text, get reply

Chat: String of completions

Agentic: Chat with knowledge base and tools
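
A minimal sketch of "chat is a string of completions", assuming a hypothetical `complete` function (text in, text out) in place of a real provider API. The transcript format and `echo_model` are illustrative only.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for a completion endpoint: text in, text out.
Complete = Callable[[str], str]
Turn = Tuple[str, str]  # (role, text)


def render_transcript(history: List[Turn]) -> str:
    """Flatten the conversation into one prompt that ends on the assistant's cue."""
    lines = [f"{role}: {text}" for role, text in history]
    lines.append("assistant:")
    return "\n".join(lines)


def chat_turn(complete: Complete, history: List[Turn], user_message: str) -> List[Turn]:
    """One chat turn is one completion over the whole conversation so far."""
    updated = history + [("user", user_message)]
    reply = complete(render_transcript(updated))
    return updated + [("assistant", reply.strip())]


def echo_model(prompt: str) -> str:
    """Toy model for demonstration: reports how many user turns it was sent."""
    return f" seen {prompt.count('user:')} user turn(s)"


history = chat_turn(echo_model, [], "Hello")
history = chat_turn(echo_model, history, "What's next?")
```

Each call resends the full history, which is why long chats get slower and more expensive.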

Commercial Models

Powerful but expensive models

- GPT-4 by OpenAI

- Gemini by Google

- Claude 3 by Anthropic

Open Source Models

Improving rapidly, customizable

- Mixtral

- Llama 2

Specialized Hardware

Dedicated chips for running LLMs

- Groq: 500 tokens/second

Truffle 1

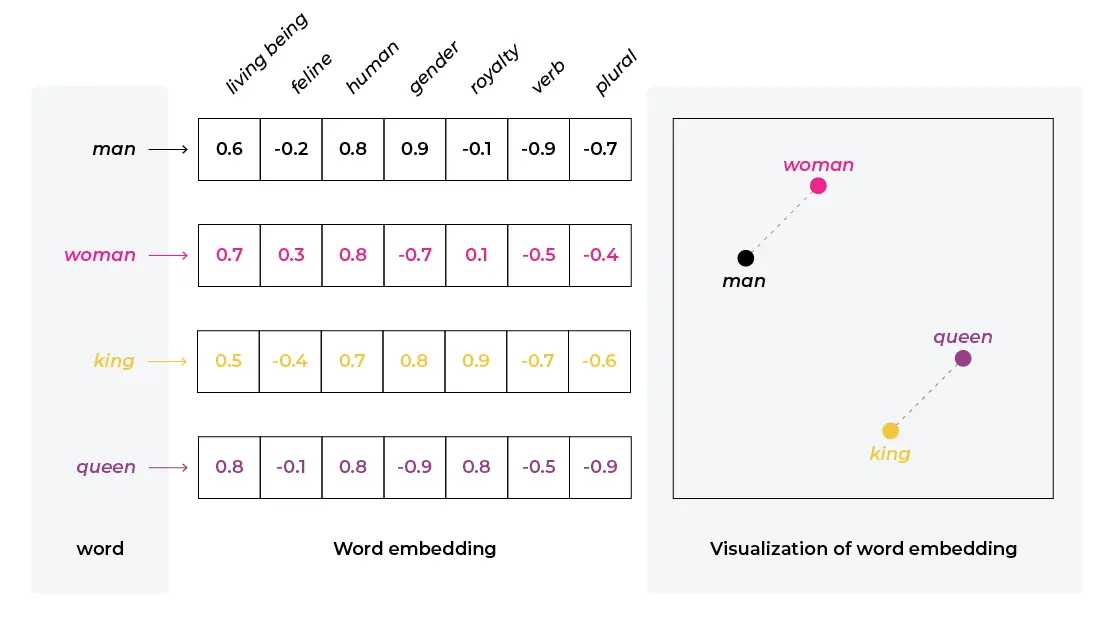

Embeddings and RAG

Capturing semantic meaning, leveraging data

Retrieval-Augmented Generation
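
A rough sketch of the retrieval step in RAG. `toy_embed` is a bag-of-words stand-in, assumed here so the example runs without a model; a real system would call an embedding model instead. The documents and prompt wording are made up.

```python
import math
from collections import Counter
from typing import Dict, List


def toy_embed(text: str) -> Dict[str, float]:
    """Toy stand-in for an embedding model: a word-count vector."""
    return {word: float(n) for word, n in Counter(text.lower().split()).items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = toy_embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, toy_embed(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: List[str], k: int = 1) -> str:
    """Put the retrieved documents in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "the office closes at 6pm on fridays",
    "expense reports are due on the first monday of each month",
    "the cafeteria serves lunch from noon",
]
prompt = build_rag_prompt("when are expense reports due", docs)
```

The resulting `prompt` is what gets sent to the LLM; only the retrieved document travels with the question.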

Prompting Techniques

Optimizing prompts for better performance

- Few-shot: Provide examples

- Chain-of-thought: Coax step-by-step reasoning

- Additive: Build on existing context
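
As a sketch, the first two techniques come down to string building. The `Input:`/`Output:` layout and the step-by-step suffix are common conventions assumed here, not a format the talk prescribes.

```python
from typing import List, Tuple


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Show worked input/output pairs, then leave the last output open for the model."""
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts += [f"Input: {example_input}", f"Output: {example_output}", ""]
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


def chain_of_thought_prompt(question: str) -> str:
    """Ask the model to reason before it answers."""
    return f"{question}\nLet's think step by step."


sentiment_prompt = few_shot_prompt(
    "Classify the sentiment as positive or negative.",
    [("I love this app", "positive"), ("It keeps crashing", "negative")],
    "Setup was painless",
)
```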

Emoji Mode

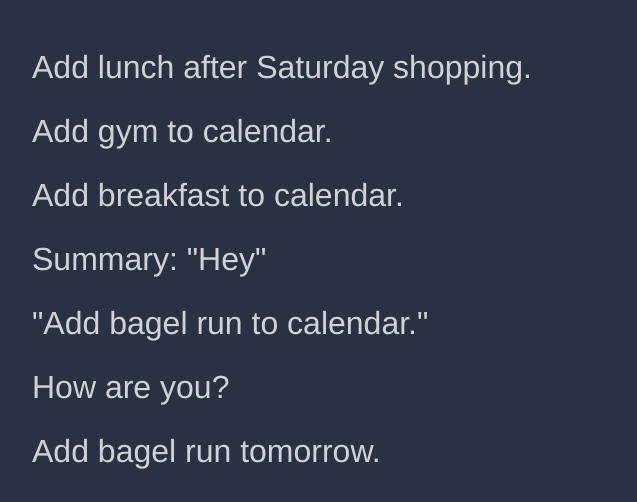

Conversation Summarization

- Generate thread titles from initial message

- Improves user experience

- Easy to implement

- Thumbs Up!
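
A minimal sketch of title generation, assuming a hypothetical `complete` function (text in, text out). The prompt wording, word limit, and fallback title are illustrative choices.

```python
from typing import Callable

Complete = Callable[[str], str]  # hypothetical completion function


def title_prompt(first_message: str, max_words: int = 6) -> str:
    """Ask for a short title based on the opening message."""
    return (
        f"Write a title of at most {max_words} words for a conversation "
        f"that starts with this message. Reply with the title only.\n\n{first_message}"
    )


def clean_title(raw: str, max_words: int = 6) -> str:
    """Keep the first line, drop quotes and a trailing full stop, cap the length."""
    lines = raw.strip().splitlines()
    first = lines[0] if lines else ""
    first = first.strip().strip("\"'").rstrip(".")
    words = first.split()[:max_words]
    return " ".join(words) or "New conversation"


def generate_title(complete: Complete, first_message: str, max_words: int = 6) -> str:
    """One completion call, then tidy whatever comes back."""
    return clean_title(complete(title_prompt(first_message, max_words)), max_words)
```

The cleanup step matters because models often wrap titles in quotes or add a trailing explanation.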

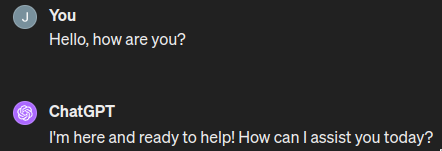

Google Calendar Agent

- Create, edit, delete, comment on events via conversation

- Powerful but challenging implementation

- Thumbs up for the feature, thumbs down for the use case

Google Calendar Extension

- Stripped down version of agent

- Create events from tagged webpage text

- Extracting structured data from text

- Thumbs up
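
A sketch of extracting structured data from text, under assumptions: the `CalendarEvent` fields, the JSON keys, and the `complete` function are all hypothetical, and no Google Calendar API is called. It only shows the prompt-then-validate shape.

```python
import json
from dataclasses import dataclass
from datetime import datetime
from typing import Callable

Complete = Callable[[str], str]  # hypothetical completion function


@dataclass
class CalendarEvent:
    title: str
    start: datetime
    end: datetime


def extraction_prompt(text: str) -> str:
    """Ask for a fixed JSON shape so the reply can be parsed."""
    return (
        "Extract one calendar event from the text below. "
        'Reply with JSON only, using the keys "title", "start" and "end", '
        "with ISO 8601 datetimes.\n\n" + text
    )


def parse_event(raw: str) -> CalendarEvent:
    """Validate the model's reply before anything is created from it."""
    data = json.loads(raw)
    event = CalendarEvent(
        title=str(data["title"]).strip(),
        start=datetime.fromisoformat(data["start"]),
        end=datetime.fromisoformat(data["end"]),
    )
    if event.end <= event.start:
        raise ValueError("event must end after it starts")
    return event


def extract_event(complete: Complete, text: str) -> CalendarEvent:
    """Tagged webpage text in, validated event out."""
    return parse_event(complete(extraction_prompt(text)))
```

Parsing into typed fields catches malformed replies early, before they reach a calendar.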

Novel YouTube Video Surfacing

- Find semantically dissimilar videos using embeddings

- Compared video transcript embeddings

- Identified least similar videos

- Useful for recommendation systems

- Thumbs up
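
A sketch of the "least similar" lookup. The three-dimensional vectors and video ids are invented for illustration; real transcript embeddings would come from an embedding model and have far more dimensions.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def least_similar(reference_id: str, embeddings: Dict[str, Sequence[float]], k: int = 1) -> List[str]:
    """Rank the other videos by ascending similarity to the reference video."""
    reference = embeddings[reference_id]
    others = [video_id for video_id in embeddings if video_id != reference_id]
    ranked = sorted(others, key=lambda video_id: cosine_similarity(reference, embeddings[video_id]))
    return ranked[:k]


# Made-up embeddings keyed by hypothetical video ids.
video_embeddings = {
    "clojure-intro": [0.9, 0.1, 0.0],
    "clojure-macros": [0.8, 0.2, 0.1],
    "sourdough-baking": [0.0, 0.1, 0.9],
}
novel = least_similar("clojure-intro", video_embeddings)
```

Sorting ascending instead of descending is the only change from a conventional "more like this" recommender.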

Recommendations

- Use LLMs for completions and niche features

- Use embeddings for comparing and finding similarities

- Use LLMs for extracting structured data from text

- Working with LLM APIs is easy

Avoid AI Agents (for now)

- Slow and expensive

- Unreliable for complex tasks

- Limited model capabilities (e.g., GPT-3.5)

- Lack of insight into model's reasoning

Wrangling LLMs

Techniques for better control and results

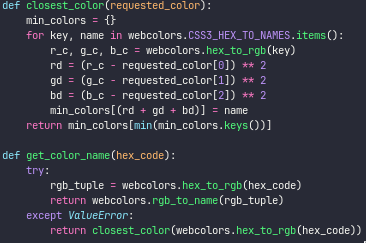

Technique 1: Treat Like a Human

Provide context and input in a human-understandable format

Example: Using color names instead of RGB values
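
One way to apply the color-name idea, as a sketch: map each RGB value to its nearest named color before it goes into a prompt. The small `NAMED_COLORS` palette and the squared-distance metric are assumptions for illustration.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]

# Small illustrative palette; extend it to suit the application.
NAMED_COLORS: Dict[str, RGB] = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "red": (255, 0, 0),
    "green": (0, 128, 0),
    "blue": (0, 0, 255),
    "yellow": (255, 255, 0),
    "orange": (255, 165, 0),
}


def nearest_color_name(rgb: RGB) -> str:
    """Pick the named color with the smallest squared distance in RGB space."""
    def distance(name: str) -> int:
        return sum((a - b) ** 2 for a, b in zip(rgb, NAMED_COLORS[name]))
    return min(NAMED_COLORS, key=distance)


def describe_palette(colors: List[RGB]) -> str:
    """Turn raw RGB values into words a model (or a person) can reason about."""
    return ", ".join(nearest_color_name(color) for color in colors)


palette_text = describe_palette([(250, 10, 5), (10, 20, 240), (250, 160, 10)])
```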

Technique 2: Allow Flexibility

Expect some variation in output formats

Parse and convert outputs as needed
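
A sketch of tolerant parsing, assuming two typical kinds of variation: JSON wrapped in extra prose or a code fence, and yes/no answers with stray formatting. The accepted word lists are illustrative.

```python
import json
import re
from typing import Any, Dict


def parse_json_reply(raw: str) -> Dict[str, Any]:
    """Accept bare JSON, fenced JSON, or JSON surrounded by prose.

    Takes everything from the first '{' to the last '}', so it assumes the
    reply contains a single JSON object and no stray braces around it.
    """
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in reply")
    return json.loads(raw[start:end + 1])


def parse_bool_reply(raw: str) -> bool:
    """Accept 'Yes.', '**yes**', 'Answer: no' and similar variants."""
    for token in re.findall(r"[a-z]+", raw.lower()):
        if token in {"yes", "true"}:
            return True
        if token in {"no", "false"}:
            return False
    raise ValueError(f"could not read a yes/no answer from: {raw!r}")
```

Raising on unreadable replies keeps the failure visible, so the caller can retry or fall back.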

Technique 3: Pipelining

Break down tasks into multiple focused API calls

Chain models for different subtasks

Iteratively refine and improve solutions
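
A minimal sketch of a prompt pipeline, assuming a hypothetical `complete` function and made-up step templates. Each step is a small, focused prompt whose output feeds the next one.

```python
from typing import Callable, List

Complete = Callable[[str], str]  # hypothetical completion function


def run_pipeline(complete: Complete, step_templates: List[str], initial_input: str) -> List[str]:
    """Run focused prompts in sequence, passing each output to the next step.

    Every template must contain an `{input}` placeholder. All intermediate
    outputs are returned so each stage can be inspected.
    """
    outputs: List[str] = []
    current = initial_input
    for template in step_templates:
        current = complete(template.format(input=current))
        outputs.append(current)
    return outputs


# Illustrative steps; a different `complete` could be used per step to chain models.
STEPS = [
    "List the key requirements in this request:\n{input}",
    "Write a short implementation plan for these requirements:\n{input}",
    "Review this plan and fix any gaps:\n{input}",
]
```

Keeping the intermediate outputs makes it easier to see which stage went wrong, which a single mega prompt does not offer.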

Mega Prompt

Prompt Pipeline

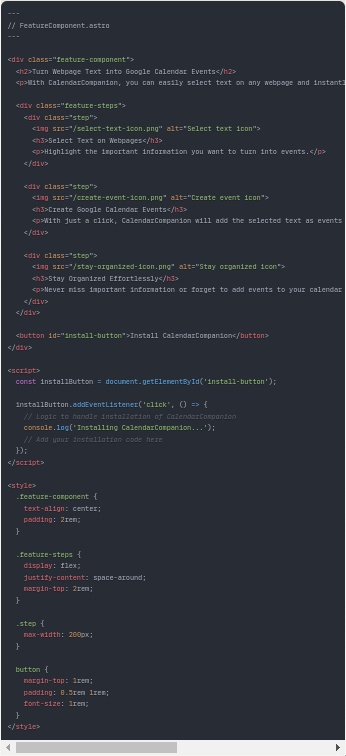

Design to HTML

Fed Figma design screenshots to ChatGPT

Generated a solid starting point

Input

Output

Render HTML

Learning New Technologies

Used LLMs to learn new frameworks, APIs, and concepts

Shortcut the process of digging through documentation and sample code

Excels at generating basic code and examples

Code Completion with Copilot

Used Copilot for intelligent code autocompletion

Generates test data, fills arrays, and more

Satisfying and time-saving experience

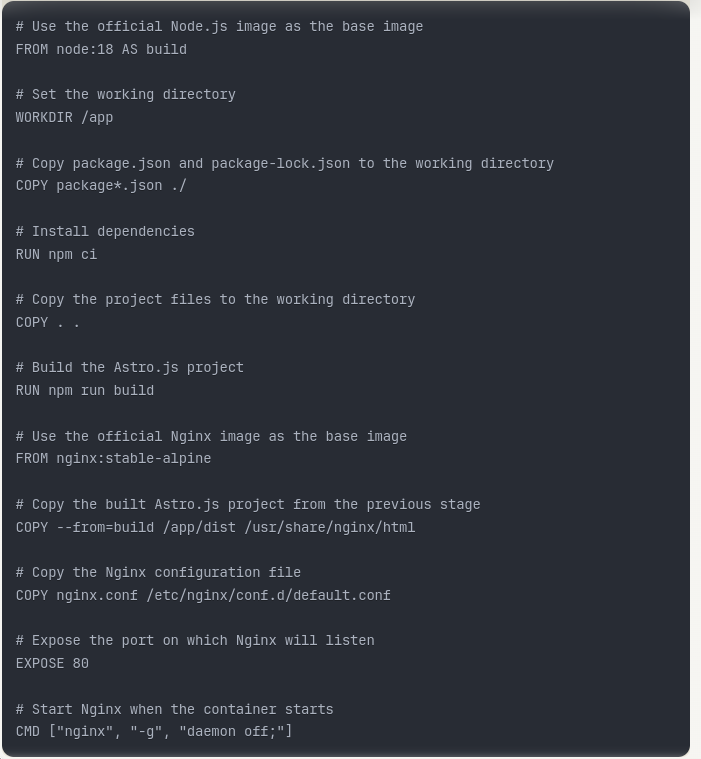

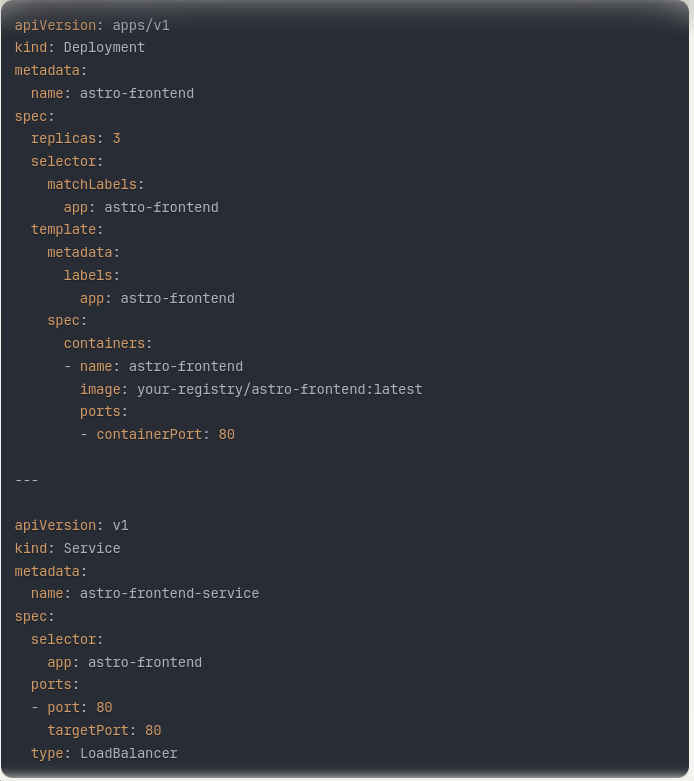

DevOps and Infrastructure

Consulted ChatGPT for DevOps tasks

Better than verbose or missing online documentation

Generates Dockerfiles, scripts, and deploy files

Neovim Integration

Integrated ChatGPT.nvim with Groq hardware

Blazing fast at 500 tokens/second

Currently free to use

Thanks!

For more experiments follow @TechTolley

For more webinars follow @juxtpro